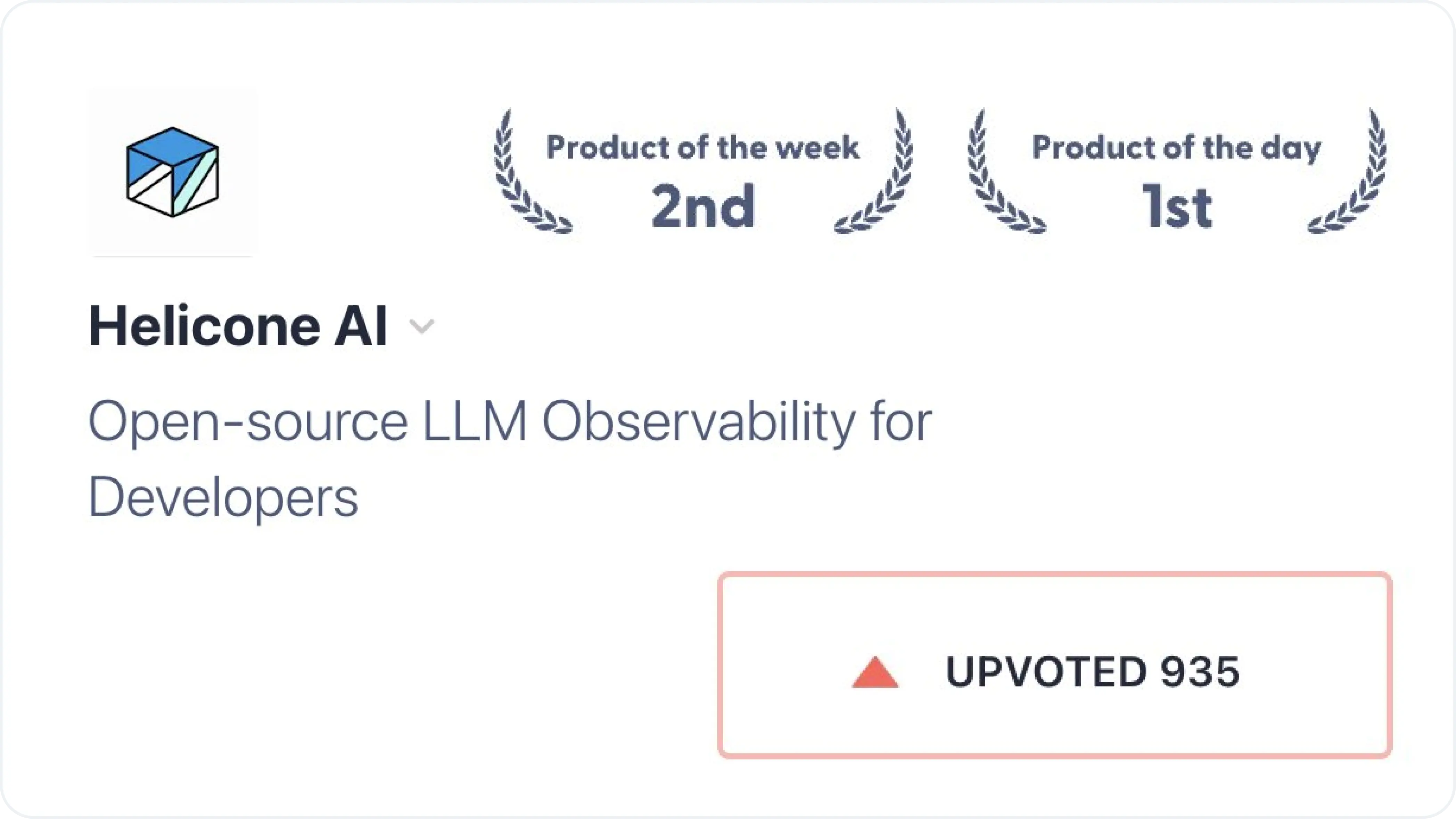

#1 Product of the Day on Product Hunt

Helicone Reaches #1 on Product Hunt!

This achievement reflects our team’s hard work and the incredible support from our community. We’re thrilled about the boost in visibility for our platform!

Highlights:

- #1 on Product Hunt’s daily leaderboard
- Positive feedback from the open-source community
- Surge in new user sign-ups and engagement

A huge thank you to everyone who upvoted, commented, and shared Helicone. Your support motivates us to keep improving!

For more on our Product Hunt journey, check out our blog posts:

- How to Automate a Product Hunt Launch - Lessons from Helicone’s Success
- Behind 900 pushups, lessons learned from being #1 Product of the Day

Links:

Product Hunt: Helicone on Product Hunt